Low-light Object Detection and Instance Segmentation

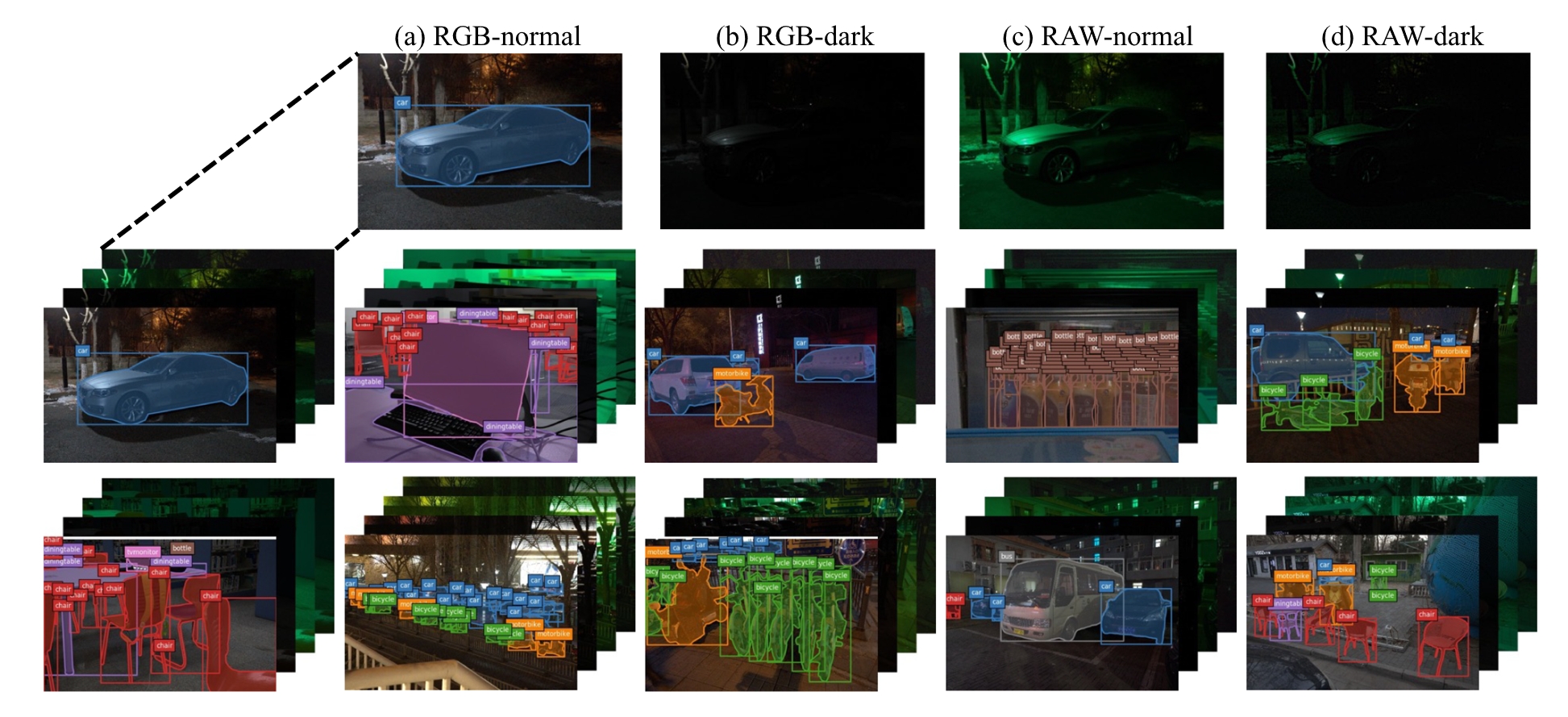

Fig. 1. An example from the Object Detection and Instance Segmentation in the Dark dataset. Four image types (long-exposure normal-light and short-exposure low-light images, in both RAW and sRGB formats) are captured for each scene.

Compared with well-lit environments, low-light conditions make it much harder to maintain image quality, often causing loss of detail, color distortion, and pronounced noise. This degradation harms the performance of downstream visual tasks, particularly object detection and instance segmentation. Low-light object detection and instance segmentation, which aim to accurately localize and classify objects of interest under challenging lighting conditions, have therefore emerged as a pivotal research area within the computer vision community.

To advance research in this field, proposed methods must be assessed in real-world scenarios, where lighting conditions and image noise are inherently more complex and diverse. We will therefore use the Low-light Instance Segmentation (LIS) dataset, introduced by Prof. Fu’s team in [a] and captured with a Canon EOS 5D Mark IV camera. The LIS dataset comprises paired images collected across a variety of indoor and outdoor scenes. To cover a comprehensive range of low-light conditions, the long-exposure reference images were taken at different ISO levels (e.g., 800, 1600, 3200, 6400), and the exposure times were deliberately adjusted by varying low-light factors (e.g., 10, 20, 30, 40, 50, 100) to simulate extremely low-light conditions. Each image pair in the LIS dataset contains instances of common object classes (bicycle, car, motorcycle, bus, bottle, chair, dining table, TV), accompanied by precise instance-level, pixel-wise labels. These annotations serve as the ground truth for evaluating the object detection and instance segmentation performance of proposed methods.

We will host the competition on an open-source online platform, e.g., CodaLab. All submissions are evaluated by our script running on the server, and we will manually double-check the results of the top-ranked methods before releasing the final test-set rating.
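To make the role of the low-light factors in the dataset description concrete, the following is a minimal sketch. It assumes that a low-light factor acts as a simple divisor on the long-exposure time; the text above does not state this relation explicitly. The function name `short_exposure_time` and the example reference exposure are hypothetical and are not part of the LIS dataset tooling.

```python
from fractions import Fraction

# Example low-light factors quoted in the dataset description.
LOW_LIGHT_FACTORS = (10, 20, 30, 40, 50, 100)


def short_exposure_time(long_exposure_s, factor):
    """Return the short-exposure time in seconds.

    Assumption (not stated in the source): the short exposure equals the
    long-exposure time divided by the low-light factor.
    """
    if factor <= 0:
        raise ValueError("low-light factor must be positive")
    return Fraction(long_exposure_s) / factor


if __name__ == "__main__":
    # Hypothetical long-exposure reference of 1/2 second.
    reference = Fraction(1, 2)
    for factor in LOW_LIGHT_FACTORS:
        print(f"factor {factor:>3}: {short_exposure_time(reference, factor)} s")
```

Exact fractions are used instead of floats because shutter speeds are conventionally written as fractions of a second, which keeps the printed values readable.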